38 machine learning noisy labels

Noisy Labels: Theoretical Approaches/Empirical Studies We demonstrate that several proposed learning-with-noisy-labels solutions in the literature relate closely to negative label smoothing (NLS), which is defined as using a negative weight to combine the hard and soft labels. We unify (positive) label smoothing (LS) and NLS into generalized label smoothing (GLS), and analyze the properties of GLS when learning with noisy labels.

PDF Learning with Noisy Labels - Carnegie Mellon University The theoretical machine learning community has also investigated the problem of learning from noisy labels. Soon after the introduction of the noise-free PAC model, Angluin and Laird [1988] proposed the random classification noise (RCN) model, where each label is flipped independently with some probability ρ ∈ [0, 1/2).
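The two ideas in the snippets above can be illustrated with a small sketch. It is not taken from either paper. It assumes binary labels for the RCN model, and it assumes the "soft label" in GLS is the uniform distribution, combined as (1 - r) * one_hot + r / K. The function names `flip_labels_rcn` and `gls_target` and the parameter `r` are hypothetical names chosen for illustration.

```python
import random


def flip_labels_rcn(labels, rho, seed=0):
    """Flip each binary label (0 or 1) independently with probability rho."""
    if not 0.0 <= rho < 0.5:
        raise ValueError("rho must lie in [0, 1/2)")
    rng = random.Random(seed)
    return [1 - y if rng.random() < rho else y for y in labels]


def gls_target(hard_label, num_classes, r):
    """Combine a one-hot label with the uniform soft label using weight r.

    Assumed parameterization: (1 - r) * one_hot + r / num_classes.
    r > 0 is ordinary label smoothing, r = 0 keeps the hard label,
    and r < 0 is negative label smoothing.
    """
    uniform = 1.0 / num_classes
    return [
        (1.0 - r) * (1.0 if k == hard_label else 0.0) + r * uniform
        for k in range(num_classes)
    ]


clean = [0, 1] * 500
noisy = flip_labels_rcn(clean, rho=0.2)
flip_rate = sum(c != n for c, n in zip(clean, noisy)) / len(clean)
print(f"empirical flip rate: {flip_rate:.3f}")

print(gls_target(hard_label=1, num_classes=4, r=0.2))   # positive smoothing
print(gls_target(hard_label=1, num_classes=4, r=-0.2))  # negative smoothing
```

Under this parameterization the target always sums to 1, but with a negative r the off-target entries become negative and the target entry exceeds 1, so the result is no longer a probability vector.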

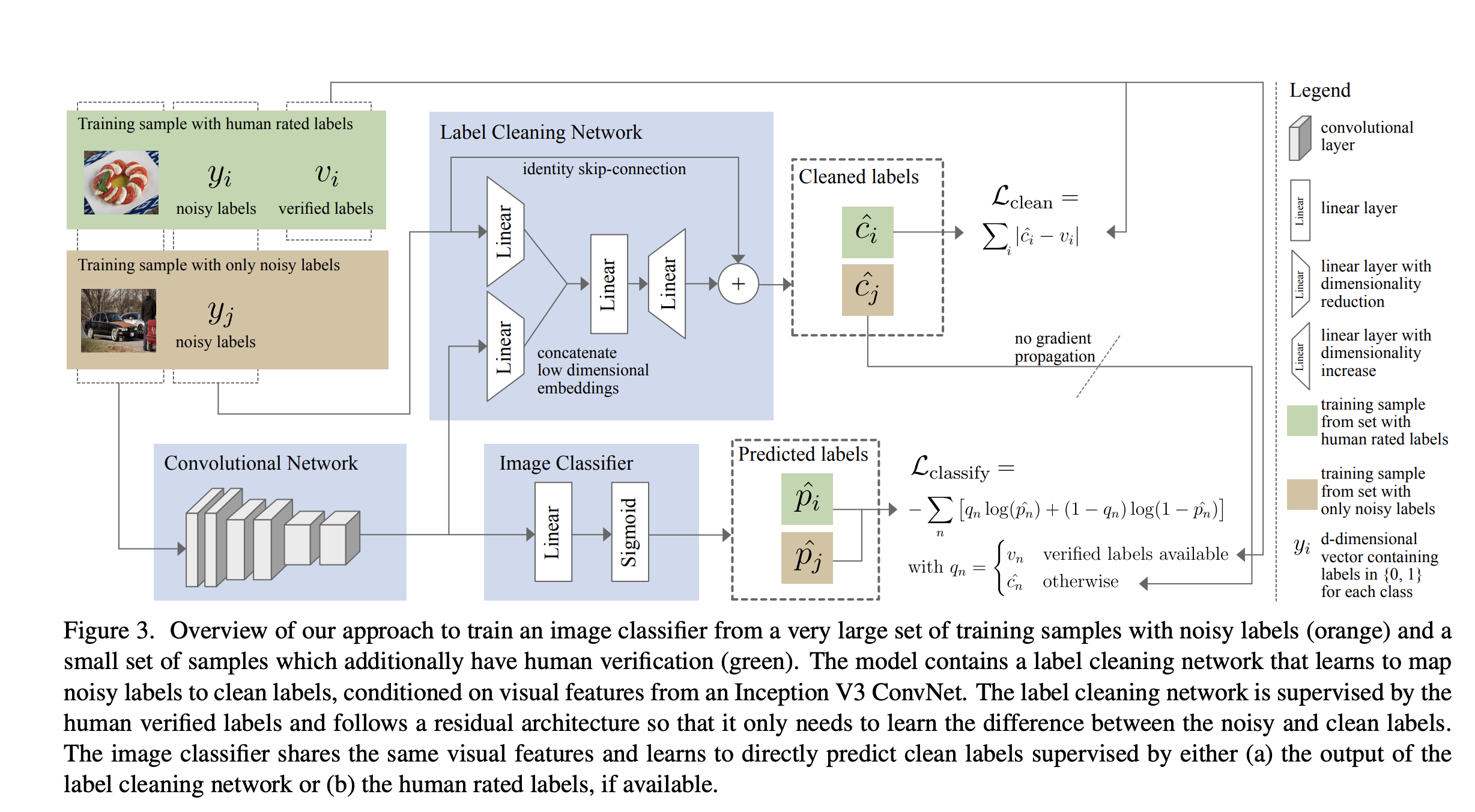

Understanding Deep Learning on Controlled Noisy Labels In "Beyond Synthetic Noise: Deep Learning on Controlled Noisy Labels", published at ICML 2020, we make three contributions towards better understanding deep learning on non-synthetic noisy labels. First, we establish the first controlled dataset and benchmark of realistic, real-world label noise sourced from the web (i.e., web label noise ...

Machine learning noisy labels

Dealing with noisy training labels in text ... - Stack Overflow Works with sklearn/pyTorch/Tensorflow/FastText/etc.

    lnl = LearningWithNoisyLabels(clf=LogisticRegression())
    lnl.fit(X=X_train_data, s=train_noisy_labels)
    # Estimate the predictions you would have gotten by training with *no* label errors.
    predicted_test_labels = lnl.predict(X_test)

An Introduction to Classification Using Mislabeled Data The performance of any classifier, or for that matter any machine learning task, depends crucially on the quality of the available data. Data quality in turn depends on several factors, for example accuracy of measurements (i.e. noise), presence of important information, absence of redundant information, how much collected samples actually represent the population, etc.

How to Improve Deep Learning Model Robustness by Adding Noise Keras supports the addition of noise to models via the GaussianNoise layer. This is a layer that will add noise to inputs of a given shape. The noise has a mean of zero and requires that a standard deviation of the noise be specified as a parameter. For example:

    # import noise layer
    from keras.layers import GaussianNoise
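To make the behaviour of such a noise layer concrete, here is a minimal pure-Python stand-in. It is not the Keras implementation. The function name `gaussian_noise` and its `training` and `seed` parameters are hypothetical; the sketch only mirrors the idea of adding zero-mean noise with a chosen standard deviation while training and leaving inputs untouched at inference.

```python
import random


def gaussian_noise(inputs, stddev, training=True, seed=None):
    """Return inputs with zero-mean Gaussian noise added during training.

    At inference time (training=False) the inputs pass through unchanged.
    """
    if not training:
        return list(inputs)
    rng = random.Random(seed)
    return [x + rng.gauss(0.0, stddev) for x in inputs]


batch = [0.5, -1.2, 3.0, 0.0]
print(gaussian_noise(batch, stddev=0.1, seed=42))         # perturbed copy
print(gaussian_noise(batch, stddev=0.1, training=False))  # unchanged copy
```

A larger `stddev` acts as stronger regularization, so in practice it would be tuned like any other hyperparameter.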

How to handle noisy labels for robust learning from uncertainty Most deep neural networks (DNNs) are trained with large amounts of noisy labels when they are applied. Because DNNs have enough capacity to fit any noisy labels, they are known to be difficult to train robustly with noisy labels. These noisy labels degrade DNN performance through the memorization effect caused by over-fitting.

Data Noise and Label Noise in Machine Learning | by Till ... Jul 01, 2021 · Asymmetric Label Noise, All Labels: A randomly chosen α% of all labels i are switched to label i + 1, or to 0 for the maximum i (see Figure 3). This follows the real-world scenario in which labels are randomly corrupted, since the order of labels in datasets is also random [6]. [Figure 3 (own image): asymmetric label noise.] Asymmetric Label Noise, Single Label ...

Using Noisy Labels to Train Deep Learning Models on Satellite Imagery The goal of the project was to detect buildings in satellite imagery using a semantic segmentation model. We trained the model using labels extracted from Open Street Map (OSM), which is an open source, crowd-sourced map of the world. The labels generated from OSM contain noise: some buildings are missing, and others are poorly aligned with ...

PDF Label Distribution for Learning with Noisy Labels - IJCAI ... become noisy labels. Thus, designing algorithms that deal with noisy labels is of great importance for learning robust DNNs. However, it is difficult to distinguish between noisy labels and clean labels, which becomes the bottleneck of many methods. To address the problem, this paper proposes a novel method named Label Distribution based
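The "all labels" asymmetric noise scheme quoted above (switch a randomly chosen share of labels from i to i + 1, wrapping the maximum label to 0) can be sketched as follows. The function name `asymmetric_noise_all_labels` is hypothetical, and `alpha` is expressed here as a fraction in [0, 1] rather than a percentage, which is an assumption made for convenience.

```python
import random


def asymmetric_noise_all_labels(labels, num_classes, alpha, seed=0):
    """Switch a random alpha fraction of labels from i to (i + 1) % num_classes."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be a fraction in [0, 1]")
    rng = random.Random(seed)
    n_flip = round(alpha * len(labels))
    # Sample the positions to corrupt without replacement.
    flip_idx = set(rng.sample(range(len(labels)), n_flip))
    return [
        (y + 1) % num_classes if i in flip_idx else y
        for i, y in enumerate(labels)
    ]


labels = [0, 1, 2, 3] * 25
noisy = asymmetric_noise_all_labels(labels, num_classes=4, alpha=0.2)
changed = [(c, n) for c, n in zip(labels, noisy) if c != n]
print(len(changed), "labels switched; first few:", changed[:5])
```

Because positions are sampled without replacement, exactly `round(alpha * len(labels))` labels change, which makes the noise level easy to control in experiments.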

Noisy Labels in Remote Sensing Annotating RS images with multi-labels at large-scale to drive DL studies is time consuming, complex, and costly in operational scenarios. To address this issue, existing thematic products (e.g., Corine Land-Cover map) can be used, however the land-use and land-cover labels through these products can be incomplete and noisy. Handling data with incomplete and noisy labels may result in ...

Understanding and Utilizing Deep Neural Networks Trained with Noisy Labels Conference paper by Pengfei Chen, Ben Ben Liao, Guangyong Chen, and Shengyu Zhang. Proceedings of the 36th International Conference on Machine Learning, Proceedings of Machine Learning Research (PMLR), 2019, pp. 1062-1070. Editors: Kamalika Chaudhuri and Ruslan Salakhutdinov. Identifier: pmlr-v97-chen19g. https ...

PDF Learning with Noisy Labels - NeurIPS The theoretical machine learning community has also investigated the problem of learning from noisy labels. Soon after the introduction of the noise-free PAC model, Angluin and Laird [1988] proposed the random classification noise (RCN) model, where each label is flipped independently with some probability ρ ∈ [0, 1/2).

Deep learning with noisy labels: Exploring techniques and remedies in medical image analysis Abstract: Supervised training of deep learning models requires large labeled datasets. There is a growing interest in obtaining such datasets for medical image analysis applications. However, the impact of label noise has not received sufficient attention.

An instance-dependent simulation framework for learning with label noise We propose a simulation framework for generating instance-dependent noisy labels via a pseudo-labeling paradigm. We show that the distribution of the synthetic noisy labels generated with our framework is closer to human labels compared to independent and class-conditional random flipping. Equipped with controllable label noise, we study the negative impact of noisy labels across a few ...

Learning from Noisy Labels with Deep Neural Networks: A Survey As noisy labels severely degrade the generalization performance of deep neural networks, learning from noisy labels (robust training) is becoming an important task in modern deep learning applications. In this survey, we first describe the problem of learning with label noise from a supervised learning perspective.

[P] Noisy Labels and Label Smoothing : MachineLearning - reddit It's safe to say it has significant label noise. Another thing to consider is things like dense prediction of things such as semantic classes or boundaries for pixels over videos or images. By their very nature classes may be subjective, and different people may label with different acuity; add to this the class imbalance problem.

Robustness and Reliability When Training With Noisy Labels Labelling of data for supervised learning can be costly and time-consuming and the risk of incorporating label noise in large data sets is imminent. When training a flexible discriminative model using a strictly proper loss, such noise will inevitably shift the solution towards the conditional distribution over noisy labels.
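The last point above, that the fitted solution drifts toward the conditional distribution over noisy labels, can be made concrete in the simplest setting. The sketch below assumes class-conditional noise described by a transition matrix, which is an assumption of this example and not necessarily the setting of the quoted paper. The name `noisy_posterior` and the matrix `T` are hypothetical.

```python
def noisy_posterior(clean_posterior, transition):
    """Map P(y | x) to P(noisy y | x) under class-conditional noise.

    transition[i][j] is the assumed probability that true class i is
    recorded as class j; each row must sum to 1.
    """
    num_classes = len(clean_posterior)
    return [
        sum(clean_posterior[i] * transition[i][j] for i in range(num_classes))
        for j in range(num_classes)
    ]


# Hypothetical 3-class example: 20% of class 0 is mislabeled as class 1.
T = [
    [0.8, 0.2, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]
clean = [0.9, 0.05, 0.05]
shifted = noisy_posterior(clean, T)
print(shifted)
```

A model that perfectly minimizes a strictly proper loss on the noisy data would, under these assumptions, output the shifted vector instead of the clean one, with probability mass moved from class 0 to class 1.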

Meta-learning from noisy labels :: Päpper's Machine Learning ... Label noise introduction Training machine learning models requires a lot of data. Often, it is quite costly to obtain sufficient data for your problem. Sometimes, you might even need domain experts, who don't have much time and are expensive. One option that you can look into is getting cheaper, lower quality data, i.e. having less experienced people annotate data. This usually has the ...

PDF Cost-Sensitive Learning with Noisy Labels Learning from noisy training data is a problem of theoretical as well as practical interest in machine learning. In many applications such as learning to classify images, it is often the case that the labels are noisy. Even human labelers are susceptible to errors in labeling; for instance, certain image categories may be hard to discern.

machine learning - Classification with noisy labels? - Cross Validated Let $p_t$ be a vector of class probabilities produced by the neural network and $\ell(y_t, p_t)$ be the cross-entropy loss for label $y_t$. To explicitly take into account the assumption that 30% of the labels are noise (assumed to be uniformly random), we could change our model to produce $\tilde{p}_t = 0.3/N + 0.7\,p_t$ instead and optimize
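The mixing step described above can be sketched in a few lines. The snippet does not define N, so this example assumes it is the number of classes. The function names `mix_with_uniform` and `cross_entropy` and the example probabilities are hypothetical.

```python
import math


def mix_with_uniform(probs, noise_rate):
    """Return noise_rate / N + (1 - noise_rate) * p for each class probability."""
    n = len(probs)
    return [noise_rate / n + (1.0 - noise_rate) * p for p in probs]


def cross_entropy(label, probs):
    """Negative log-probability assigned to the observed label."""
    return -math.log(probs[label])


p_t = [0.98, 0.01, 0.01]          # hypothetical network output
p_tilde = mix_with_uniform(p_t, noise_rate=0.3)

print(cross_entropy(1, p_t))      # loss on a label the model finds unlikely
print(cross_entropy(1, p_tilde))  # smaller, bounded by -log(0.3 / N)
```

Because every entry of the mixed vector is at least 0.3 / N, the loss on any single example is capped, so a confidently contradicted (possibly mislabeled) example cannot dominate the gradient.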

Title: Compressing Features for Learning with Noisy Labels Abstract: Supervised learning can be viewed as distilling relevant information from input data into feature representations. This process becomes difficult when supervision is noisy as the distilled information might not be relevant. In fact, recent research shows that networks can easily overfit all labels including those that are corrupted, and hence can hardly generalize to clean datasets.

subeeshvasu/Awesome-Learning-with-Label-Noise - GitHub 2021-IJCAI - Towards Understanding Deep Learning from Noisy Labels with Small-Loss Criterion. 2022-WSDM - Towards Robust Graph Neural Networks for Noisy Graphs with Sparse Labels. 2022-Arxiv - Multi-class Label Noise Learning via Loss Decomposition and Centroid Estimation.

How Noisy Labels Impact Machine Learning Models - KDnuggets Apr 06, 2021 · While this study demonstrates that ML systems have a basic ability to handle mislabeling, many practical applications of ML are faced with complications that make label noise more of a problem. These complications include: Not being able to create very large training sets, and Systematic labeling errors that confuse machine learning.

How Noisy Labels Impact Machine Learning Models | iMerit Mar 29, 2021 · Supervised Machine Learning requires labeled training data, and large ML systems need large amounts of training data. Labeling training data is resource intensive, and while techniques such as crowd sourcing and web scraping can help, they can be error-prone, adding ‘label noise’ to training sets.

Constrained Reweighting for Training Deep Neural Nets with Noisy Labels We formulate a novel family of constrained optimization problems for tackling label noise that yield simple mathematical formulae for reweighting the training instances and class labels. These formulations also provide a theoretical perspective on existing label smoothing-based methods for learning with noisy labels. We also propose ways for ...
